Opening the Vocabulary of Egocentric Actions


¹National University of Singapore ²Meta Reality Labs Research
NeurIPS 2023

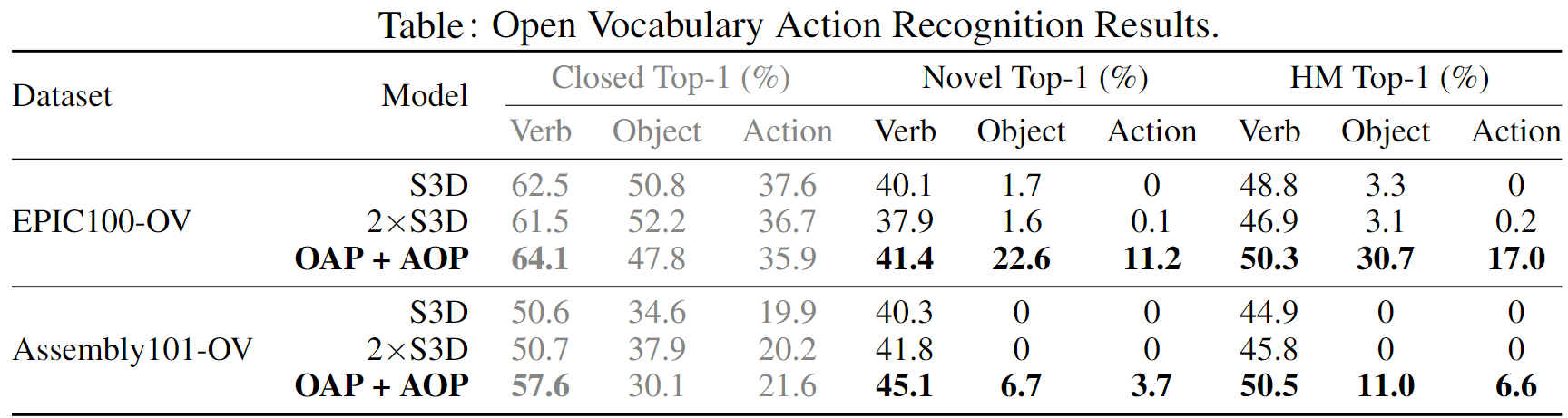

Abstract

Human actions in egocentric videos are often hand-object interactions composed of a verb (performed by the hand) applied to an object. Despite extensive scaling, egocentric datasets still face two limitations: sparsity of action compositions and a closed set of interacting objects. This paper proposes a novel open vocabulary action recognition task. Given a set of verbs and objects observed during training, the goal is to generalize the verbs to an open vocabulary of actions with seen and novel objects. To this end, we decouple the verb and object predictions via an object-agnostic verb encoder and a prompt-based object encoder. The prompting leverages CLIP representations to predict an open vocabulary of interacting objects. We create open vocabulary benchmarks on the EPIC-KITCHENS-100 and Assembly101 datasets; whereas closed-action methods fail to generalize, our proposed method is effective. In addition, our object encoder significantly outperforms existing open-vocabulary visual recognition methods in recognizing novel interacting objects.
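To make the decoupled verb/object idea concrete, here is a minimal sketch, assuming a closed-set verb head that outputs one logit per training verb and an object head that scores a clip embedding against text embeddings of object names (as CLIP-style prompting would). Everything here is hypothetical and illustrative, not the authors' implementation: the function names `predict_action` and `cosine`, the toy 3-d embeddings, the temperature, and the choice to score an action as the product of verb and object probabilities. In practice the embeddings would come from CLIP's image and text encoders.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    # Numerically stable softmax: subtract the max before exponentiating.
    m = max(logits)
    exps = [math.exp((x - m) / temperature) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    # Cosine similarity between two vectors (hypothetical helper).
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def predict_action(
    verb_logits: Dict[str, float],
    visual_embed: Sequence[float],
    object_text_embeds: Dict[str, Sequence[float]],
    temperature: float = 0.01,
) -> Tuple[str, str, float]:
    # Verb branch: closed vocabulary, independent of which object is present.
    verbs = list(verb_logits)
    verb_probs = softmax([verb_logits[v] for v in verbs])
    # Object branch: open vocabulary. Any object name with a text embedding
    # can be scored, so new objects need no retraining of the verb branch.
    objects = list(object_text_embeds)
    sims = [cosine(visual_embed, object_text_embeds[o]) for o in objects]
    obj_probs = softmax(sims, temperature)
    vi = max(range(len(verbs)), key=verb_probs.__getitem__)
    oi = max(range(len(objects)), key=obj_probs.__getitem__)
    # Assumed composition rule: action score = p(verb) * p(object).
    return verbs[vi], objects[oi], verb_probs[vi] * obj_probs[oi]


# Toy usage with made-up embeddings.
verb_logits = {"cut": 2.0, "open": 0.5, "take": 0.1}
object_text_embeds = {
    "knife": [1.0, 0.0, 0.0],
    "onion": [0.0, 1.0, 0.0],
    "dragon fruit": [0.0, 0.2, 1.0],  # novel object added only at test time
}
visual_embed = [0.1, 0.2, 0.9]
verb, obj, score = predict_action(verb_logits, visual_embed, object_text_embeds)
print(verb, obj, round(score, 3))  # predicted action: cut dragon fruit
```

The design point the sketch illustrates is that the verb and object vocabularies are independent: extending `object_text_embeds` with a novel name changes the object prediction without touching the verb head, which is what lets seen verbs compose with unseen objects.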


Method


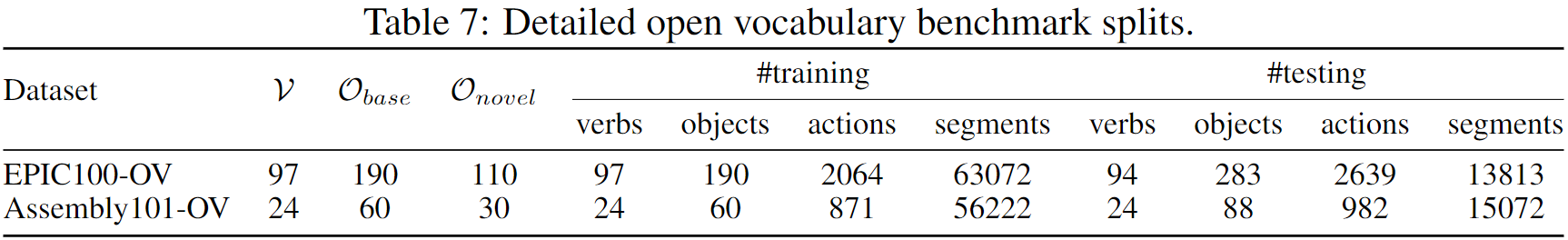

Proposed Benchmark


Citation